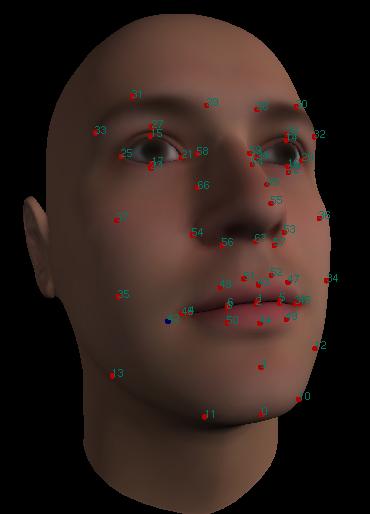

| Face and Control Points | Example of second method |

The aim of this project was to animate a virtual head using a subset of the MPEG-4 feature points (shown in red on the diagram) to control the face. These points were themselves animated using a FAP stream (a changing set of positions for the points), and the main goal of the assignment was to implement different techniques for deforming the face as each MPEG-4 point moved.

To achieve this, it was necessary to develop an algorithm that could decide which vertices in the mesh were affected by which control points, and how much influence a particular control point had on each vertex.

For the first part of the assignment, a spherical proximity test was used to determine which vertices a control point affected, and the influence a control point had on a vertex was determined as a function of the distance between the vertex and the control point. The main issue with this technique was that it did not handle discontinuities in the mesh, such as the mouth or eyes, in a useful manner. For example, a control point on the top lip would control vertices on both the top and bottom lip, because both lie within the same sphere, making it extremely difficult to open the mouth.
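The spherical test can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the function names, the `radius` parameter, and the cosine falloff are all assumptions, since the write-up only says that influence was "a function of the distance".

```python
import math

def spherical_weights(vertices, control_point, radius):
    """Return {vertex_index: weight} for vertices inside the sphere.

    The cosine falloff is an assumed choice: the weight is 1 at the
    control point and drops smoothly to 0 at the sphere boundary.
    """
    weights = {}
    for i, v in enumerate(vertices):
        d = math.dist(v, control_point)  # straight-line (Euclidean) distance
        if d < radius:
            weights[i] = 0.5 * (1.0 + math.cos(math.pi * d / radius))
    return weights

def displace(vertices, weights, offset):
    """Move each influenced vertex by the control point's offset, scaled by its weight."""
    moved = [list(v) for v in vertices]
    for i, w in weights.items():
        for k in range(3):
            moved[i][k] += w * offset[k]
    return moved
```

Because the test uses straight-line distance only, a bottom-lip vertex that lies a short distance below a top-lip control point receives almost the same weight as a top-lip vertex at that distance, which is exactly the problem described above.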

In the second part of the assignment, this problem was addressed by finding the vertices a control point affected by traversing the mesh, adding up the lengths of the edges traversed, and using this sum as a measure of the distance from the control point to a vertex. This technique could then be used to open the mouth more easily: the control point on the top lip would not affect vertices on the bottom lip, because the distance to them would be measured by traversing around the mouth.
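One way to realise this traversal is a shortest-path search over the mesh edges. The sketch below uses Dijkstra's algorithm, which is an assumption; the write-up does not say which traversal was used. It also assumes the control point coincides with a mesh vertex (`source`) and that the mesh is given as a list of triangles. The names `build_adjacency`, `surface_distances`, and `max_dist` are hypothetical.

```python
import heapq
import math

def build_adjacency(vertices, triangles):
    """Map each vertex index to {neighbour_index: edge_length}."""
    adj = {i: {} for i in range(len(vertices))}
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            length = math.dist(vertices[u], vertices[v])
            adj[u][v] = length
            adj[v][u] = length
    return adj

def surface_distances(adj, source, max_dist):
    """Dijkstra over mesh edges, keeping only vertices within max_dist.

    Returns {vertex_index: summed edge length of the shortest path}.
    """
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, math.inf):
            continue  # stale heap entry; a shorter path was already found
        for v, length in adj[u].items():
            nd = d + length
            if nd <= max_dist and nd < dist.get(v, math.inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist
```

The returned distances can replace the straight-line distance in whatever falloff function is used for the influence weights. Since no edges cross the mouth opening, the path from a top-lip vertex to a bottom-lip vertex has to run around the corner of the mouth, so its summed length is large even when the two vertices are close together in space.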